The S Curve is Here

Investing in a Stochastic Singularity

Vernor Vinge died earlier this year. He was the guy who popularized the term 'singularity' back in the early 90s, giving a name and a 2030 deadline to the moment he expected the robots to 'wake up.'

Today we're going to talk about investing in the singularity, even though it’s hard and confusing.

Particularly at a moment when both macro and micro trends ought to give you pause, despite the massively bullish backdrop. From a macro perspective we're clearly late cycle. Valuations are high, employment conditions are weakening, and inflation looks to have bottomed. That could hand the Fed a Sophie's Choice in a couple of months between falling employment and rising inflation, unless Trump can unleash a wave of optimism/investment or Chinese stimulus actually restarts the engine. TBD.

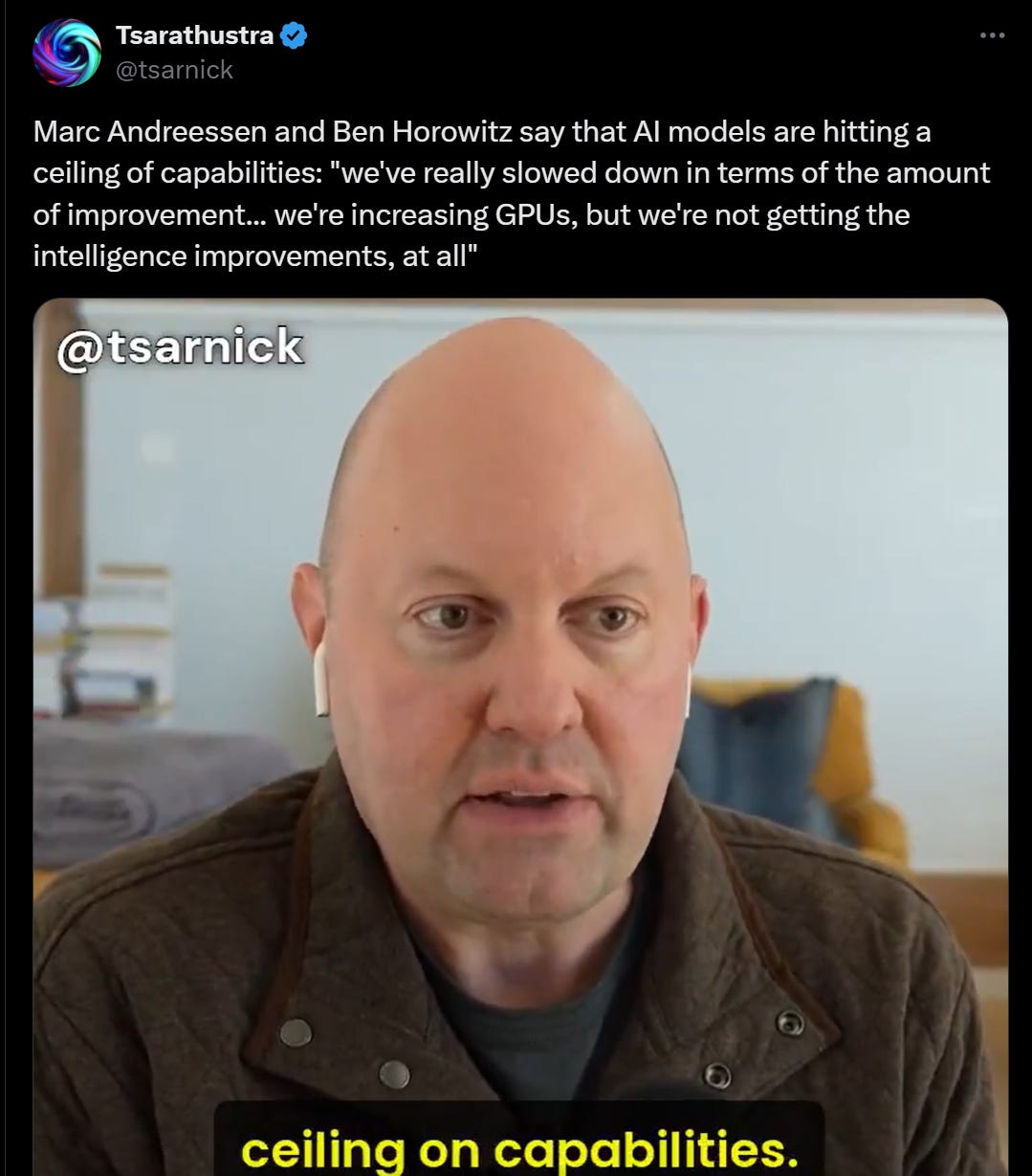

On a micro level, we find ourselves at a very interesting crossroads. We have proof that the value created by this next wave of technology is real and transformational. However, many of the biggest investments in capacity have yet to translate into actual revenue. Meanwhile, the fundamental technology powering the latest wave, the so-called 'scaling law' underneath modern LLMs, looks to be hitting a wall of diminishing marginal returns. The dreaded "S curve."

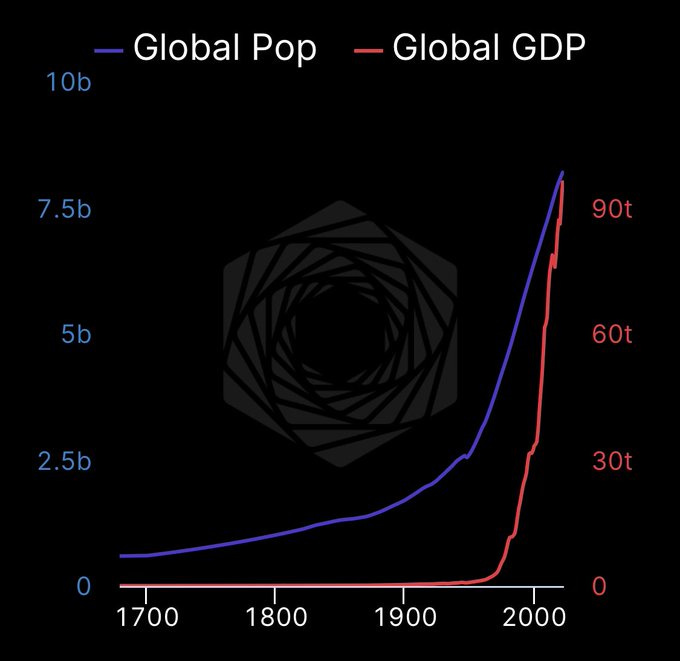

The interesting thing about the singularity is that we are already kind of living in one, at least if you zoom out far enough.

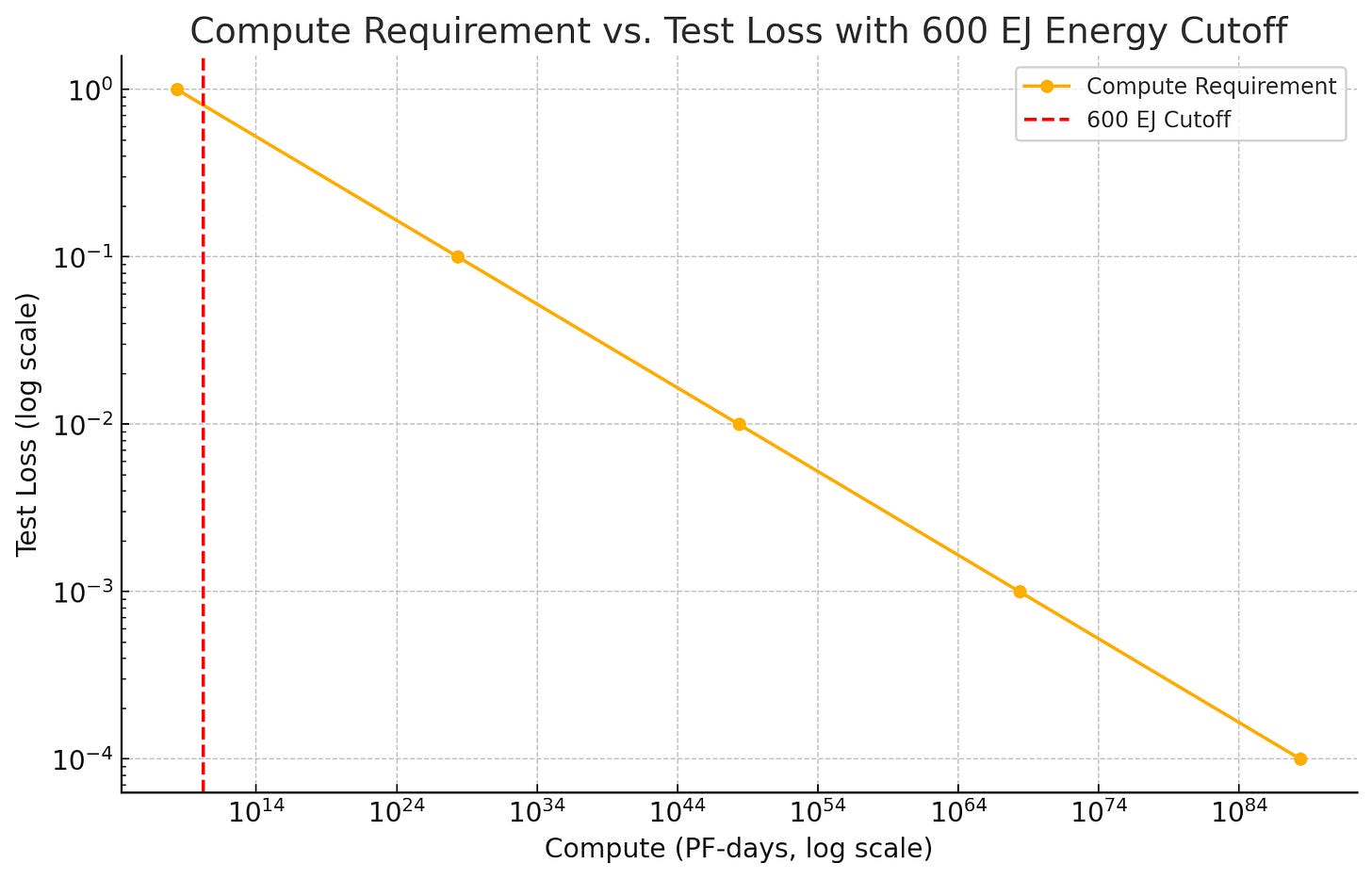

While it’s hard, if not impossible, to get a real-time view on global population, you can actually get a decent proxy for the direction of that growth line by looking at stocks. Problem is, zoom in too much and you can lose sight of the trend.

Kind of like the market’s version of the coastline paradox, where the closer you look, the more wiggles you find. What looks like a smooth curve from space becomes increasingly complex, confusing, and uncountable as you zoom in.

So while we know that "drawdowns are going to happen," the question becomes a) when are we headed into one and b) how long it will last.

Bringing us to…

The S Curve is Real

Those of you not terminally online might not know, but the scaling law - bigger models = better results - is hitting the wall.

Not hitting the wall in terms of 'can we make the models smarter,' but in terms of the rate at which the models improve relative to the time, training, and money that goes into them.

Or as Vitalik put it, the rate of change from '22 to '23 was faster than the rate of change from '23 to today.

Which is kind of the definition of deceleration.
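For anyone who wants to see what that deceleration looks like on paper, here is a minimal sketch of an S curve. It uses a plain logistic function with made-up parameters (the ceiling, midpoint, and steepness are illustrative assumptions, not fitted to any model benchmark) and prints the gain captured in each step.

```python
import math

def logistic(t, ceiling=1.0, midpoint=0.0, steepness=1.0):
    """Classic S curve: slow start, rapid middle, flattening top."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))

def step_gains(start, stop, **curve):
    """Improvement captured in each unit step between start and stop."""
    return [logistic(t + 1, **curve) - logistic(t, **curve)
            for t in range(start, stop)]

# Hypothetical timeline: steps before 0 are the ramp, steps after 0 the plateau.
gains = step_gains(-3, 4)
for t, gain in zip(range(-3, 4), gains):
    print(f"step {t:+d} -> {t + 1:+d}: gain {gain:.3f}")
```

Every step still adds something, but once you are past the midpoint each step adds less than the one before it. Progress continues while the rate of progress falls.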

Don't believe me? Look to the ‘godfather of scaling’ Ilya, who, upon raising a billion to make sure his version of superintelligence is REALLY SAFE, came out with an acknowledgement that yes, scaling is hitting a wall.

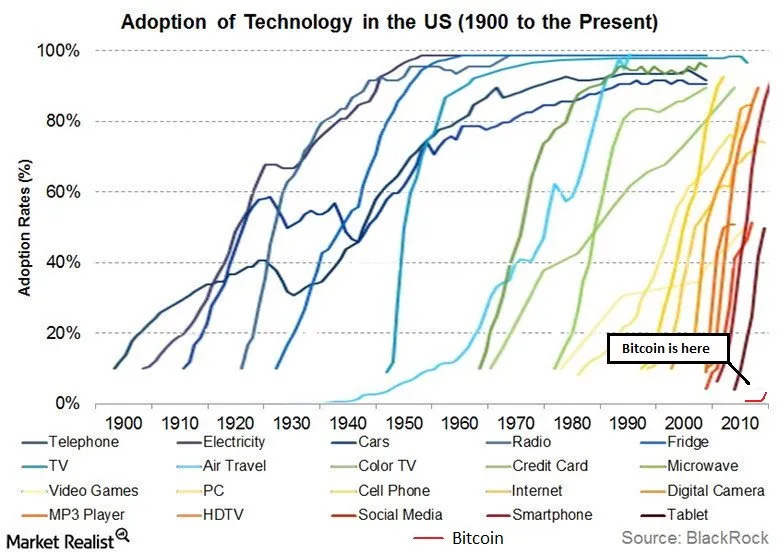

This is a pretty common pattern with consumer tech products. Whether it comes from a limit to the underlying tech or market saturation, eventually, they hit the wall.

After all, how much better is the iPhone 15 than the iPhone 7? Seems like a decade later and somehow I still manage to end the day running out of battery.

You can see this in sentiment online, where a lot of people reacted to the release of OpenAI's new model ‘o1’ with a bit of a shrug:

Which manifested in skepticism…

…grumbling…

and outright frustration.

Or, as I put it:

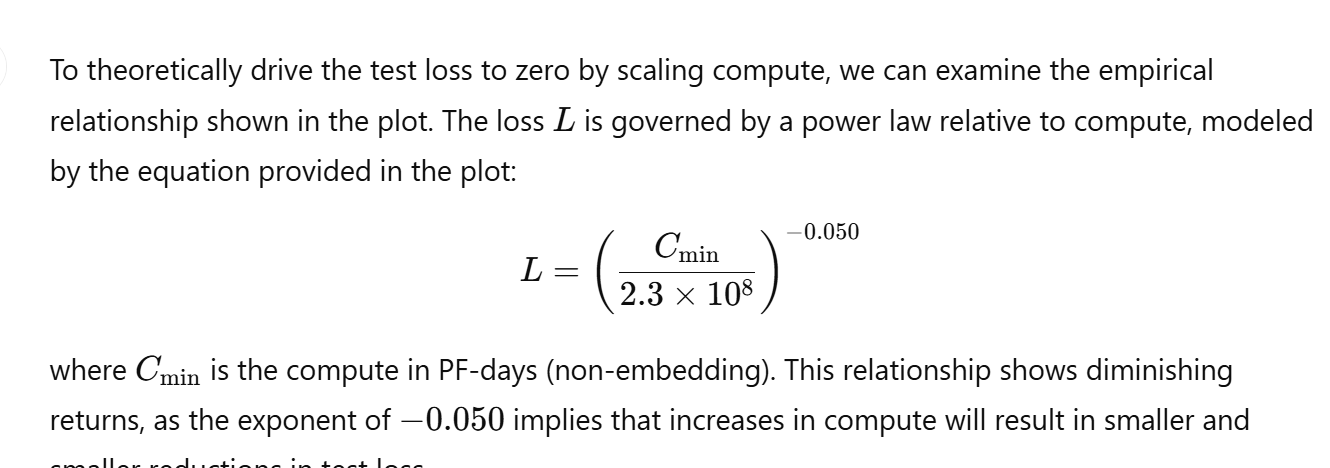

To some extent this was an inevitable conclusion baked into the heart of scaling: the idea that larger and larger models, trained on more and more data, will keep approaching, asymptotically, a line defined as the ‘minimum loss curve.’ Aka bigger will always be better.

Why? Well, if you actually take this chart at its word and extend the math on how much energy would be required to train a model with 1 or 0 loss, you get wacky numbers.

Put another way, yes the model will monotonically improve with more training, but at what cost?

Well, we can calculate that. Based on this formula.

To give a sense for how quickly those numbers get unwieldy, we can estimate what expected model loss would be if we used the entire world’s energy production to train this one model.

Hmm, so you are telling me to get the model below a loss of 1, we would need the entire world’s energy output for one year, running ~50 billion Blackwell GPUs.

And like how much more valuable would that model be, after burning the world for another 0.000001 less loss? Probably not very much.

To drive loss even further down to 0, we would need multiples of global energy production. (This assumes no data caps, which we know exist. It also raises the question of what ‘zero loss’ actually means. Which is the topic of another ramble.)

Which kind of brings into context Eliezer Yudkowsky’s prediction that one day AI would boil the oceans to feed its compute cooling needs.
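To see why the cost argument above blows up so fast, here is a rough sketch of the arithmetic. It assumes loss follows a pure power law in compute, loss = k * compute^(-alpha), with no irreducible floor. The exponent of 0.05 is an illustrative assumption in the neighborhood of published scaling-law fits, not the exact figure behind the numbers quoted above.

```python
ALPHA = 0.05  # assumed compute exponent, illustrative only

def compute_multiplier(loss_ratio, alpha=ALPHA):
    """Factor by which compute must grow to divide loss by `loss_ratio`,
    assuming loss = k * compute ** (-alpha) with no irreducible floor."""
    return loss_ratio ** (1.0 / alpha)

for ratio in (1.1, 1.5, 2.0):
    factor = compute_multiplier(ratio)
    print(f"divide loss by {ratio}: need {factor:,.0f}x the compute")
```

With an exponent that small, halving the loss takes on the order of a million times more compute. Under the same assumption the curve never actually touches zero, which is why the energy bill goes from large to absurd.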

Implications for Markets

This sets up an interesting micro story in markets. Everyone knows consumption of compute and energy goes up, but with no pure plays available, most investors end up cramming into the same mag7 tech stocks that have defined this rally.

This crowding mechanically drives down realized correlation and implied volatility, basically making portfolio insurance look cheap just when it starts to make sense.

A topic we covered this summer.
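The link between correlation and the price of insurance can be sketched with the textbook basket-variance formula. This is a toy model under loud assumptions: seven equally weighted stocks, each with the same 30% volatility and the same pairwise correlation. Real indices are cap weighted and messier, so treat the numbers as directional only.

```python
import math

def index_vol(stock_vol, avg_corr, n_stocks):
    """Volatility of an equal-weighted basket of identical-vol stocks."""
    variance = stock_vol ** 2 * (
        1.0 / n_stocks + (1.0 - 1.0 / n_stocks) * avg_corr
    )
    return math.sqrt(variance)

# Hypothetical inputs: 30% single-stock vol, 7 names, falling correlation.
for rho in (0.6, 0.4, 0.2):
    print(f"avg correlation {rho:.1f}: index vol {index_vol(0.30, rho, 7):.1%}")
```

Single-stock volatility never changes in this example, yet index volatility drops as correlation falls. Since index options are priced off index volatility, lower correlation alone is enough to make index puts look cheap.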

Time to Hedge?

Today we’re going to take a break from the 100-year time horizon stuff and serve up something tactical.

Look at NVIDIA. Amazing company, transformative tech. But it's priced like every corporate office will need an H100 cluster to check their email. Reality? Most applications just need their knowledge workers to have access to a smart assistant that works with their actual files and business data, not just "write a poem about gold."

The breaking points are visible:

Power grids that can't handle distributed AI load

Cooling systems built for a different era

Urban infrastructure hitting limits

Geographic arbitrage opportunities appearing

But try finding a clean way to trade these themes:

Utilities? Just rates exposure in disguise

Data center REITs? Same story, different wrapper

Infrastructure stocks? Too much unrelated baggage

Ok Campbell, nice story, but what am I supposed to do about it?

Well, unless you are a micro-cap stock picker, the implications are clear. You can look for and identify pipes that will break due to the wave of demand (in a good way) or you can capture the value created by AI directly by developing your own IP. Building it or buying it, we expect more and more people to capture value from the development of AI directly in their lives by... just using it.

It also means that many things that sound 'crazy' today might be reasonable tomorrow. If the singularity is made up of a series of S curves, now is the time to identify and invest in technologies and markets that appear just outside of the Overton window.

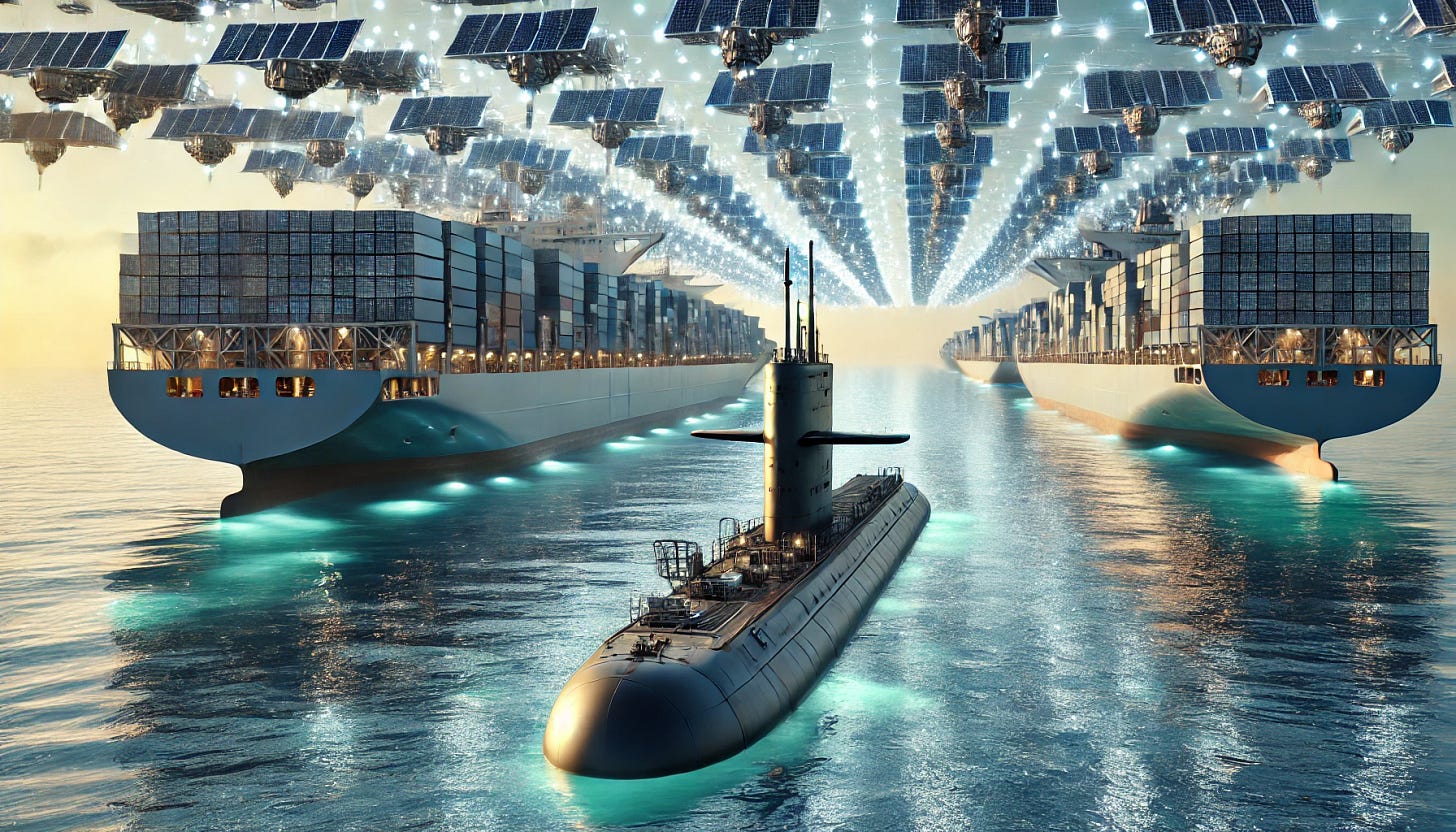

For example, the idea of buying a decommissioned nuclear sub and turning it into a data center.

Data Subs

A world where we need another 100 exajoules of energy for AI is a world where you might want that compute mobile. Something that can float on by and *poof* you are up and running with an xAI-scale data center.

How? Well, start by taking a couple of billion to buy and refurbish a decommissioned nuclear submarine, add a container ship full of 40k H100s to draw from the 50MW of surplus energy it throws off, and *poof* world’s first mobile compute system.

Which, based on our very rough back-of-the-envelope math, would be competitive cost-wise with building a new nuclear plant or getting your energy from offshore wind (though a lot depends on how much excess electricity you can squeeze out of the old rigs, and you should check my math). Whether that says more about the insane cost of building new nuclear, I will leave to the reader.

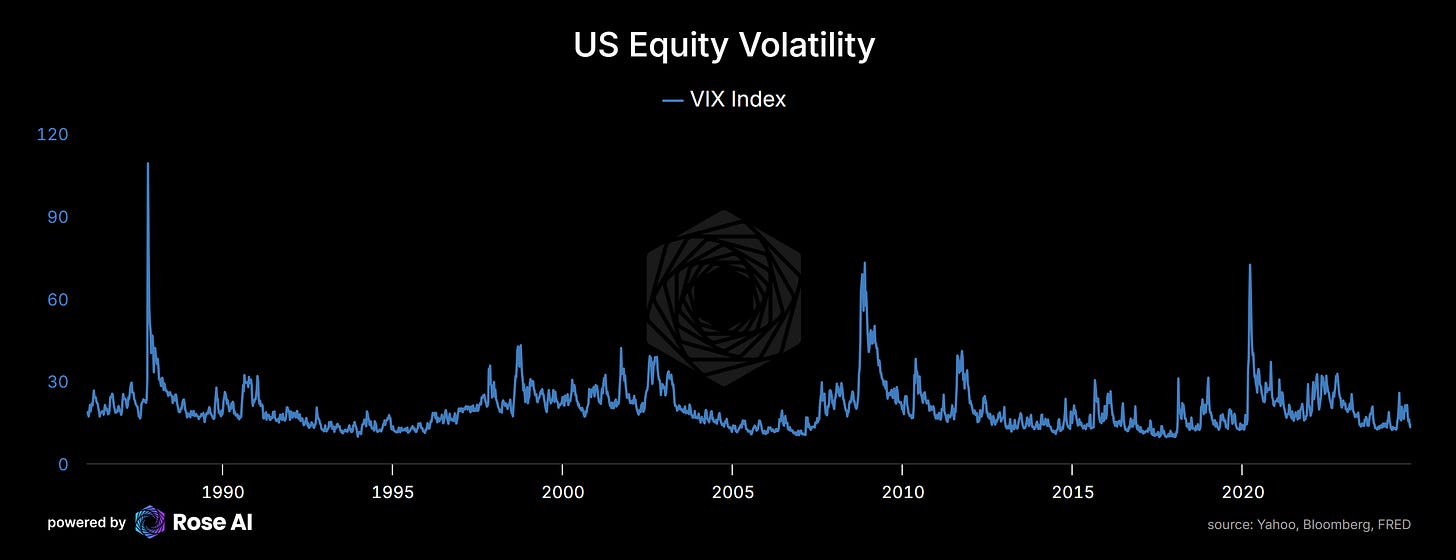

Anyway, if we say a $200m Los Angeles-class sub throws off 50MW, that gives you power for about 42k H100s. Not bad, would put you in Google territory by last count:

Which, by way of context, you could run for about a month before going over the 10^26 FLOP compute limit outlined in California AI legislation like SB1047!

Which brings up an added benefit of data subs: when you go over the caps, you can always make a speedy getaway.
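Since you should check my math, here is one way the data sub numbers could be reproduced. The 50MW and 10^26 FLOP figures come from the text above. Everything else is an assumption: 700W per H100, a 1.7x overhead for cooling, networking, and host machines, roughly 1 PFLOP/s of peak throughput per GPU, and perfect utilization.

```python
SUB_POWER_W = 50e6        # surplus electrical output quoted in the text
H100_POWER_W = 700        # assumed per-GPU board power
OVERHEAD = 1.7            # assumed multiplier for cooling, networking, hosts
H100_PEAK_FLOPS = 1e15    # assumed ~1 PFLOP/s peak per GPU
UTILIZATION = 1.0         # assumed; real training runs sit well below peak
FLOP_CAP = 1e26           # compute threshold cited in the text
SECONDS_PER_DAY = 86_400

# How many GPUs the sub can power once overhead is included.
gpus = int(SUB_POWER_W / (H100_POWER_W * OVERHEAD))

# How long the fleet takes to burn through the compute cap.
fleet_flops_per_second = gpus * H100_PEAK_FLOPS * UTILIZATION
days_to_cap = FLOP_CAP / fleet_flops_per_second / SECONDS_PER_DAY

print(f"GPUs powered: {gpus:,}")
print(f"Days to reach the cap: {days_to_cap:.1f}")
```

Under these assumptions the sub powers roughly 42k GPUs and hits the cap in a bit under a month. Drop utilization to something more realistic and the getaway window gets proportionally longer.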

P.S. If anyone from the Navy is reading this, we should talk.

[Standard disclaimers about not actually attempting to acquire nuclear submarines apply. This is what happens when you let traders do infrastructure planning.]
