Why eating meat and worrying about AGI is morally inconsistent.

Or how I learned to stop worrying and start making smarter machines.

Take a minute to ask yourself, “Why is it moral for me to eat another animal?”

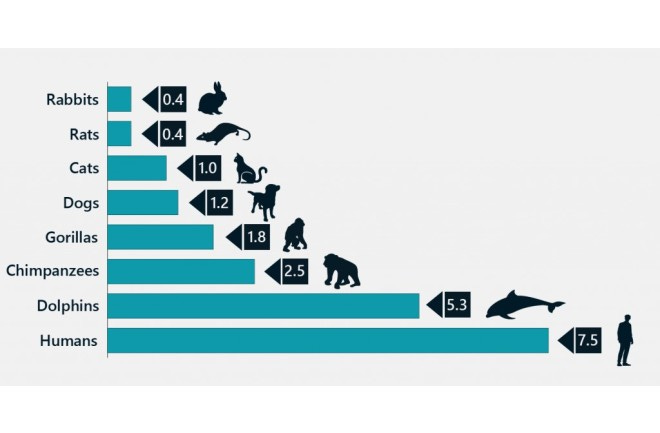

Most arguments for eating meat (or wearing fur, etc.) boil down to “we are smarter than them, so humans ought to carry radically more moral weight in society than animals.”

This argument also extends to why we don't give children, or indeed all humans, full legal rights.

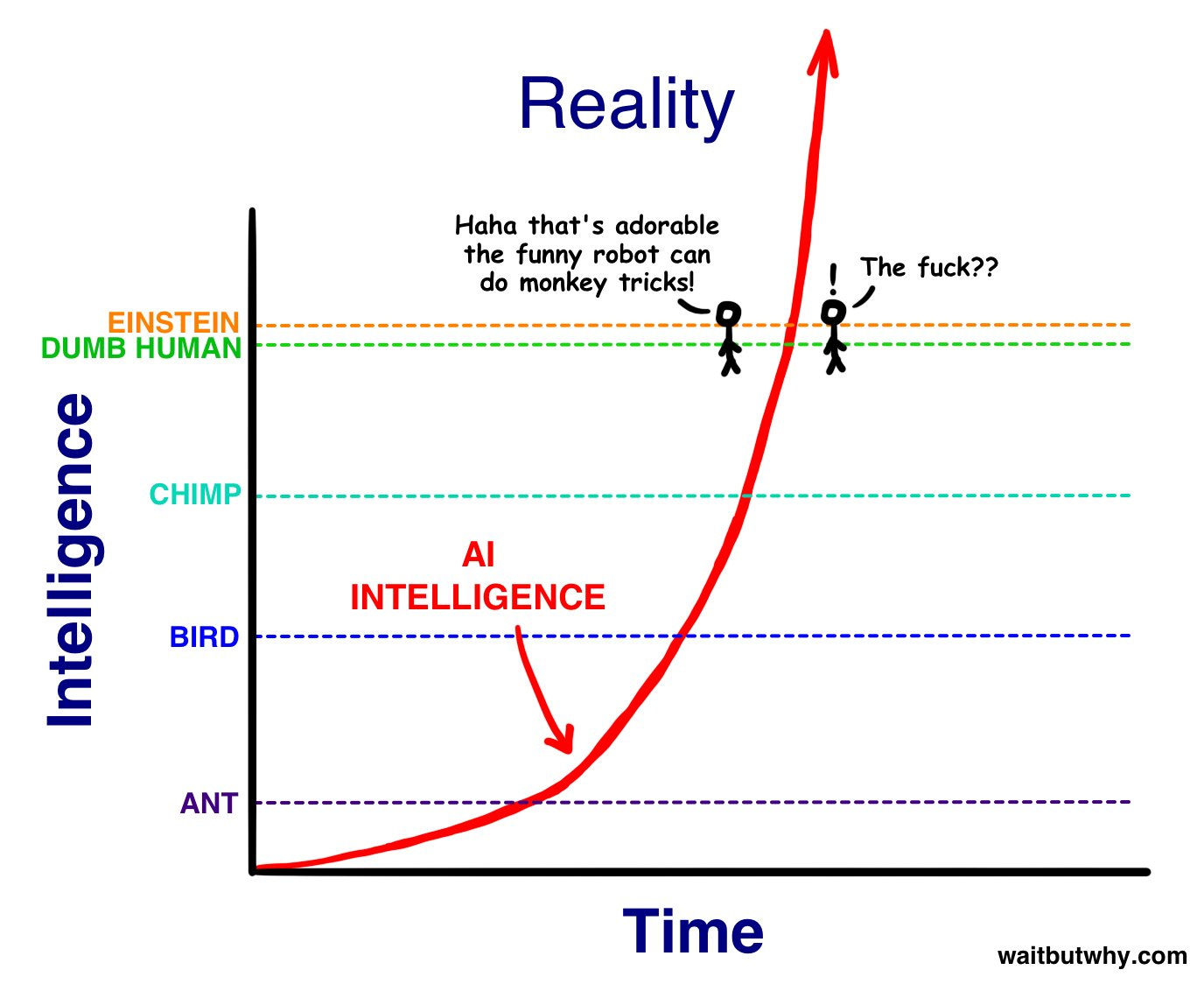

It stands to reason that any AGI capable of catching up to human intelligence will likely surpass it.

In other words, if AGI arrives, it will likely be smarter than us.

But recall: if you eat meat, then by your own moral framework, smarter beings deserve more rights!

If it’s ok to eat rabbits because they are dumber than you, on what basis do you deserve more moral consideration than a robot with 100x your intellectual capacity?

Thus, by most people’s own moral calculus, any AGI with more intellectual capacity than humans actually deserves more moral weight than the humans who make it.

This is where @willmacaskill's arguments about the future come in.

By his logic, if you care about all humans, you ought to also care about all the humans ‘yet to be born.’

Utility in the Future ~ Utility Today

Combining this moral framework of “longtermism” with a) the likely path ahead for intelligent machines, and b) our existing moral frameworks linking rights to intellectual capacity leads to some interesting results…

In particular, you are left with two potentially counterintuitive conclusions.

The AGIs developed in the future not only ought to have moral weight, but potentially more moral weight than the humans who create them.

Intelligent beings of the future (both humans and machines) deserve moral weight today.

These two principles interact. If you eat meat, not only ought you not to fear intelligent machines, but you may actually inherit a responsibility to bring them into being.

In the future, it’s build robots or be replaced by them.

There is no other way. And maybe, by our own moral thinking, that’s a good thing.

Happy weekend.

Note: this piece was adapted from a speech I gave at the 2019 Altius conference at the University of Oxford. It was also posted earlier this summer as a thread on Twitter. Follow us there for more work like this as it comes out.

Disclaimers