How to Regulate AI

Before we can hold machines responsible for their actions, the humans behind them ought to be held liable.

Well, the people are united.

Time to regulate AI.

Some worry about getting replaced by it.

Some worry about being discriminated against by it.

Some worry about its use by less-liberal regimes whose values are misaligned with ours.

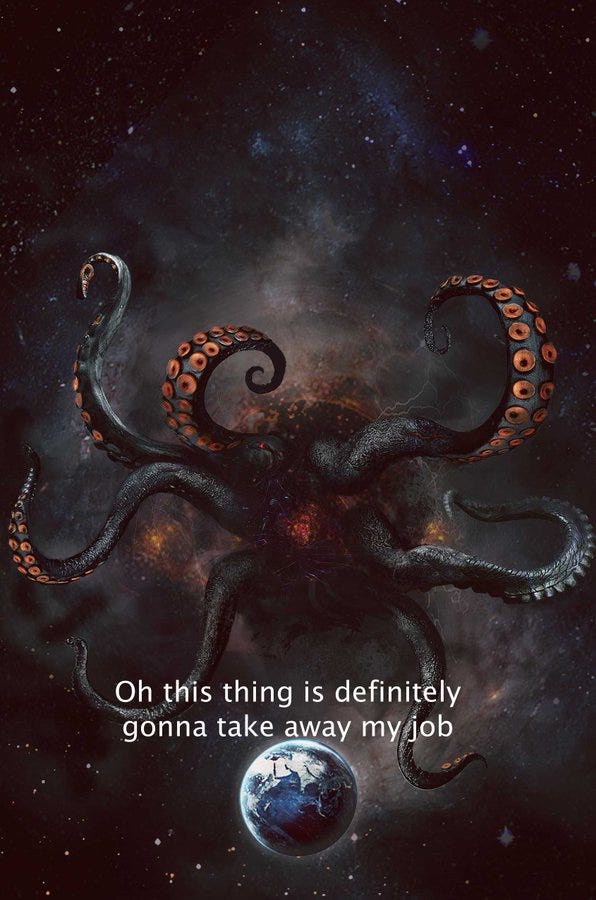

Some see much more sinister events on the horizon.

Me, I’m just trying to get a solid definition of:

What the heck IS… AI?

And how can we regulate something we can’t, as a society, even define?

Is it a certain number of GPUs? LLMs with parameters above a certain size? A certain amount of data input and then…foom? Chatbots that don’t suck?

How can we decide what AI can and cannot do, when we can’t even cleanly distinguish it from the rest of technology?

What to do?

So rather than focusing on questions like “how intelligent will the machine need to be before it decides to roast us for our H2O content?”, let’s start with this simple framework:

Until we deem the machines sentient, conscious, and entitled to essentially the same rights as adult human beings, machines are not legal persons. Legally, they don’t exist in the world except as physical and digital manifestations of their builders and operators.

Ultimately, in modern liberal democracies, a human individual is responsible for the consequences of their own actions. To the degree they harm others outside of the preexisting bounds defined by law, they are culpable for the consequences of those actions.

Until we deem AIs capable of personhood, they ought to be treated as legal extensions of the individuals on whose behalf they operate. Just like a car that’s out of control, an elevator that hasn’t been maintained, or a bullet that ‘left your gun’ accidentally, ultimately the human who set that tool in motion, or was negligent in its construction or maintenance, is culpable for the consequences of that machine’s operation.

In this way, the question isn’t just whether Sam Altman and the other tycoons have skin in the game through equity in various businesses. It’s *which* legal persons, be they humans, corporations, or charities, we ultimately hold culpable for the good and bad done by their machines.

In short:

Machines don’t exist as legal entities apart from their operators and designers. When the machine goes down, look to the human behind it as the ultimately responsible party.

AKA:

Don’t Trust Machines

In this context, we probably agree with Sam.

Not insofar as we think everyone ought to need a license to do research on matrix multiplication, but rather in that the keys to driving those machines, especially the ones with real-world connectivity and impact (banking, health, transportation, security, privacy), are going to need to be linked to our identity.

That’s what Sam means when he says license.

Basically, to run these machines, we are going to need to match you to a comprehensive, universally unique identifier (UUID).

Which is why Sam’s OTHER idea is such a monster in waiting…

A unique identifier, for every human on earth.

Coming soon.

Disclaimers